Facebook drops the ball on livestreamed suicide

Real-life media violence is desensitizing internet users

Ronnie McNutt, screenshotted here, took his own life with a shotgun in a Facebook livestream he filmed in front of a desk in his home on Aug. 31. Since the livestream, the video has gone viral and social platforms have scrambled to have it removed. (Best Gore screenshot)

Imagine you are casually scrolling through TikTok’s “For You” page, not expecting anything out of the ordinary, just your daily dose of laughs courtesy of the millions of users who regularly upload content. Now imagine a new video automatically starts playing. Because of the music and the editing, it looks like any other video, but then the man in it hangs up the phone, raises a shotgun and blows his own face off.

Yes, this actually happened to millions of TikTok and Instagram users, and no, they could not stop the video from appearing virtually out of nowhere. Facebook is entirely to blame.

The man described above was 33-year-old Ronnie McNutt, a U.S. Army veteran living in Mississippi who suffered from post-traumatic stress disorder (PTSD) after serving tours in the Iraq War, according to Heavy, a news site that covered the aftermath of McNutt’s suicide.

On Aug. 31, McNutt went on a rant and then shot himself while livestreaming on Facebook Live. During and after the stream, Facebook did essentially nothing to remove it, and in that time the video went viral, shared repeatedly on Facebook and then on other social media platforms, including TikTok, Instagram and YouTube.

Facebook’s complacency and failure to act promptly are a prime example of why cybermedia is becoming an uncontrollable, desensitizing landscape and essentially a free-for-all for violent and inappropriate content.

Heavy reported that the video of McNutt’s gory suicide was not removed until two hours and 41 minutes after the shooting, meaning that for that whole time, anyone watching the stream could see nothing but McNutt’s lifeless body.

Following his death, the livestream was edited down and shared on various other social media platforms. Because of the algorithms these sites have in place, the video appeared on suggested-video pages and began playing automatically, without any warning or censoring.

Unfortunately, Facebook and other social platforms were more than capable of preventing this stream from being viewed and going viral. These sites can ban accounts and IP addresses if necessary. If YouTube can flag a user for a copyrighted song within seconds, social platforms can certainly flag offensive content and remove it immediately, yet Facebook failed to do so.

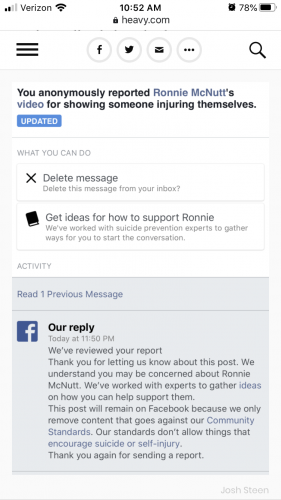

According to Heavy, McNutt’s friend Josh Steen first reported the stream to Facebook while McNutt was still alive, after McNutt accidentally misfired his gun. Others were active in the comments trying to help. Police were called as well, but they only stood outside his apartment complex watching the livestream and essentially took no action, Heavy reported.

Steen said he contacted Facebook for hours, asking the company to remove the video, according to Heavy. McNutt died by suicide at 10:30 p.m., and Facebook did not respond to Steen until 11:50 p.m.

Facebook’s ironic response read, “This post will remain on Facebook because we only remove content that goes against our Community Standards. Our standards don’t allow things that encourage suicide or self-injury.”

At this point, thousands of Facebook users had reported the video, requesting it be taken down.

According to Heavy, the video was not taken down until nearly 1:30 a.m., and by then it had already been shared multiple times to other social media sites. Facebook blamed COVID-19 for its slow response time and lack of customer service.

This is unacceptable on Facebook’s part, and the company needs to be held legally responsible for the global spread of this video. No one’s filmed death should be sensationalized and distributed for entertainment.

According to Heavy, Facebook did, however, release a statement saying, “we are reviewing how we could have taken down the livestream faster,” but it has yet to follow through on that claim.

Another issue Facebook has yet to acknowledge is the trolls and bots commenting on McNutt’s previous posts, leaving links and directions for finding the video of his suicide and contributing to its circulation. Although these comments have been reported multiple times, Facebook does not seem to consider them a violation of its community guidelines or standards.

According to Heavy, Facebook has been criticized for its response to inappropriate and offensive livestreams before. In 2019, the company drew heavy criticism for failing to respond to the mass murders in Christchurch, New Zealand, when a terrorist livestreamed himself murdering 50 Muslims, according to the Guardian, a British daily newspaper that covered the attacks.

Facebook finally responded to the crisis after two weeks and only said it would “look into” implementing restrictions on livestreams, according to the Guardian.

Sheryl Sandberg, Facebook’s chief operating officer, said the company would be “investing in research to build better technology to quickly identify edited versions of violent videos and images and prevent people from re-sharing these versions,” according to Heavy.

The company has yet to announce whether any policy changes have been made.

Although removing the video immediately could have helped slow its circulation, another major factor may have been responsible for its virality.

A recent Netflix documentary, “The Social Dilemma,” explains how algorithms are designed to suggest content for users based on their previously viewed content. The goal is to keep the user engaged with as much new content as possible.

What algorithm creators are now realizing is that because these algorithms are based on artificial intelligence, they will inevitably continue to evolve and will be very difficult to control. Even their creators don’t know everything these algorithms are capable of.

This is why McNutt’s filmed suicide was showing up on TikTok’s “For You” page. When an algorithm pushes a video onto the “For You” page, it is very difficult for users to avoid seeing the footage, since videos there play automatically.

Since the video began circulating on TikTok, the company has been frantically trying to remove it completely. TikTok executives have said they will ban any accounts that re-upload McNutt’s video, according to the Sun, which covered TikTok’s response to the viral video in September.

TikTok has been one of the more active social media platforms in getting a handle on the video, which has been circulating on its site since Sept. 6. The company released a statement that read in part, “our systems have been automatically detecting and flagging these clips for violating our policies against content that displays, praises, glorifies, or promotes suicide,” the Sun reported.

Facebook needs to be held responsible for its failure to prevent the spread of this content, since that failure left other companies scrambling to clean up its mess.

Facebook’s failure to remove the video is one of many factors desensitizing media users to violent content, since many of those who saw the video viewed it against their will.

All of this points to an even broader issue: the lack of censorship of violent content online, which is becoming easier and easier to view. Several websites are designed specifically for viewing violent content for entertainment, such as Best Gore, a Canadian shock site founded by Mark Marek.

The site advertises itself as an adults-only reality news website but is basically the Pornhub of real-life violence. It offers a massive collection of violent videos in multiple categories. The videos are shot on phone cameras or taken from security camera footage, then uploaded to the site completely uncensored. Anyone can access the site through a regular web browser.

The Canadian site launched in 2008 and drew harsh criticism when it hosted a real-life video of a murder. In 2016, Marek pleaded guilty in a Canadian court over uploading illegal content, according to Global News, a Canada-based news site that has covered Marek’s arrests and sentencing since 2012. Despite Marek’s sentencing, the atrocious site is still operating today, run by anonymous contributors.

McNutt’s video was found on this site, proof that the video’s online trail will never be completely erased. This is unfortunate for McNutt’s friends and family, who will always struggle to remember him by a less chilling legacy.

As technology continues to advance, violent content will only become more accessible. What was once shocking and obscene to the average internet user will become the norm because of constant exposure to media violence. This is an unfortunate consequence of user-generated content.

Social media platforms have fallen asleep on media violence and its effect on innocent app users, and are now scrambling to clean up their mess. We can only hope they wake up and follow through with their standards, as they should’ve done from the get-go.
